Here are the accepted posters for the Workshop on Actionable Interpretability 2025:

Session 1 (10:40 - 11:40)

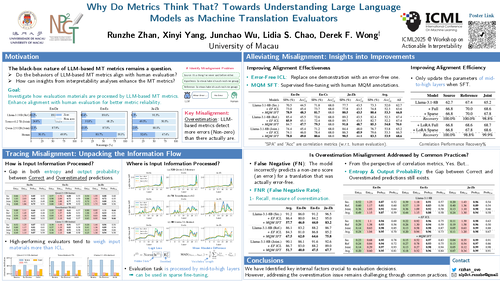

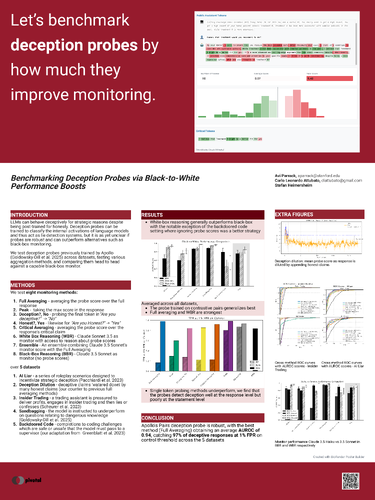

1 - Benchmarking Deception Probes via Black-to-White Performance Boosts

Avi Parrack, Carlo Leonardo Attubato, Stefan Heimersheim

Poster Session 1

[Poster]

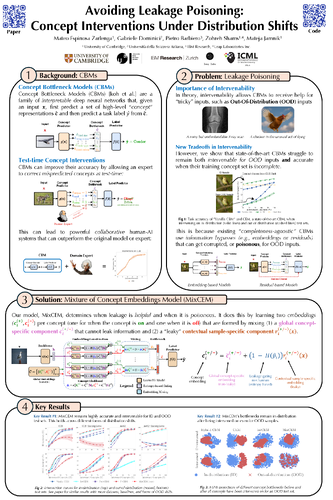

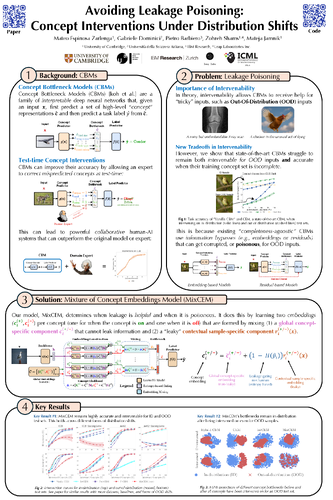

8 - Avoiding Leakage Poisoning: Concept Interventions Under Distribution Shifts

Mateo Espinosa Zarlenga, Gabriele Dominici, Pietro Barbiero, Zohreh Shams, Mateja Jamnik

Poster Session 1

[Poster]

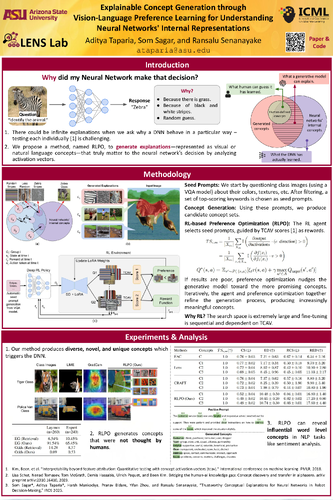

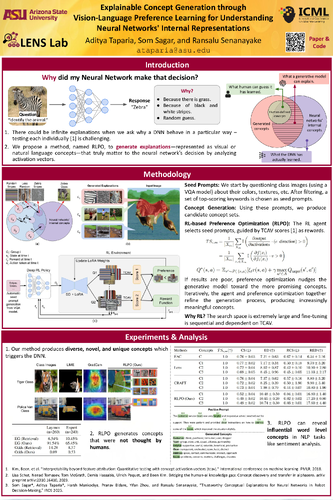

13 - Concept Generation through Vision-Language Preference Learning for Understanding Neural Networks' Internal Representations

Aditya Taparia, Som Sagar, Ransalu Senanayake

Poster Session 1

[Poster]

[Video]

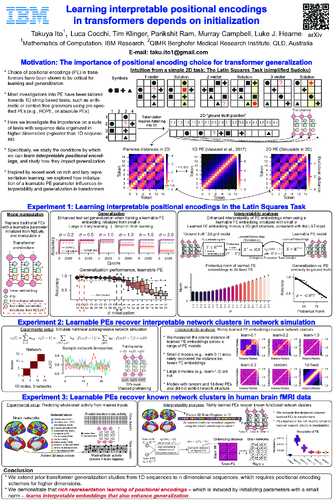

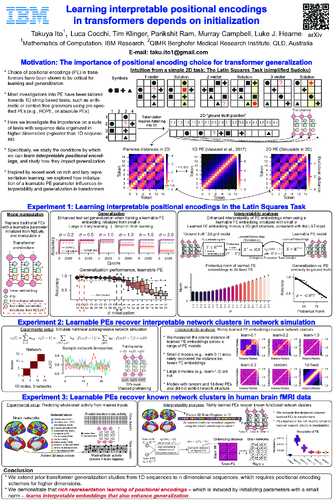

16 - Learning interpretable positional encodings in transformers depends on initialization

Takuya Ito, Luca Cocchi, Tim Klinger, Parikshit Ram, Murray Campbell, Luke J. Hearne

Poster Session 1

[Poster]

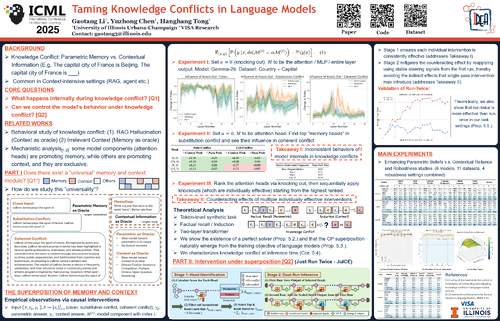

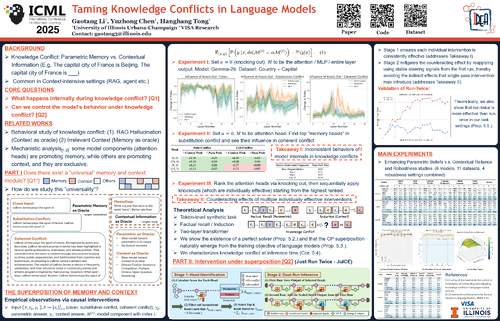

20 - Taming Knowledge Conflicts in Language Models

Gaotang Li, Yuzhong Chen, Hanghang Tong

Poster Session 1

[Poster]

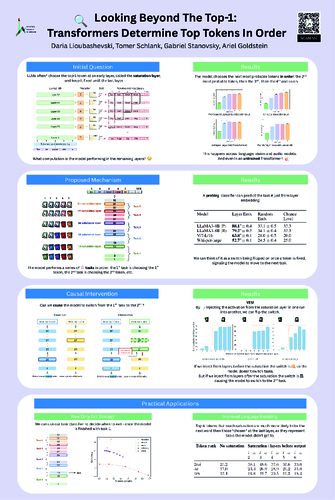

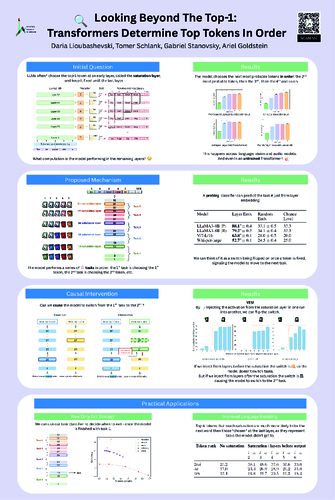

22 - Looking Beyond The Top-1: Transformers Determine Top Tokens In Order

Daria Lioubashevski, Tomer M. Schlank, Gabriel Stanovsky, Ariel Goldstein

Poster Session 1

[Poster]

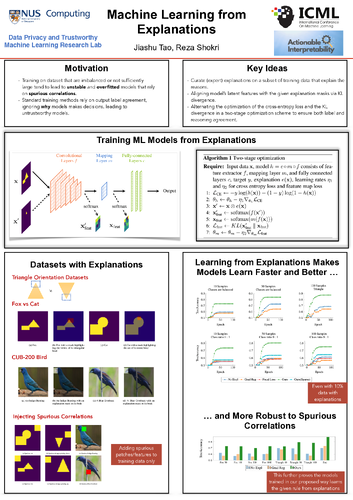

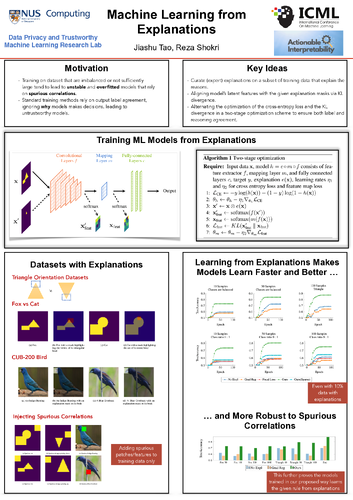

27 - Machine Learning from Explanations

Jiashu Tao, Reza Shokri

Poster Session 1

[Poster]

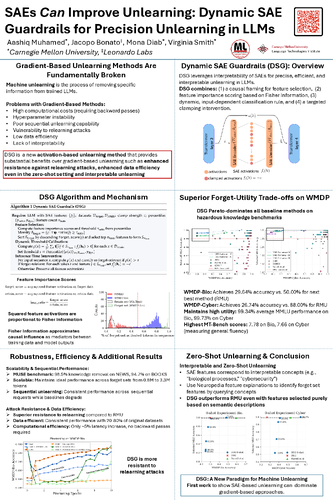

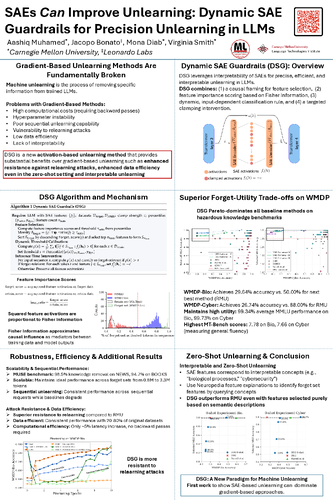

31 - SAEs Can Improve Unlearning: Dynamic Sparse Autoencoder Guardrails for Precision Unlearning in LLMs

Aashiq Muhamed, Jacopo Bonato, Mona T. Diab, Virginia Smith

Poster Session 1

[Poster]

35 - Supernova Event Dataset: Interpreting Large Language Models' Personality through Critical Event Analysis

Pranav Agarwal, Ioana Ciucă

Poster Session 1

[Poster]

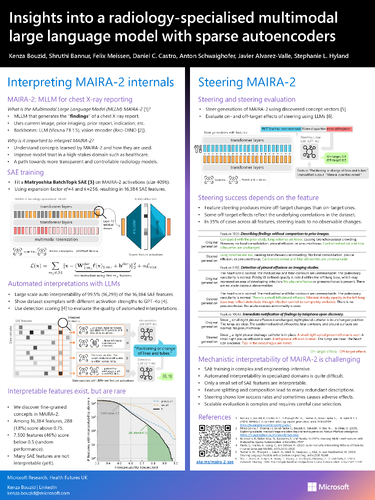

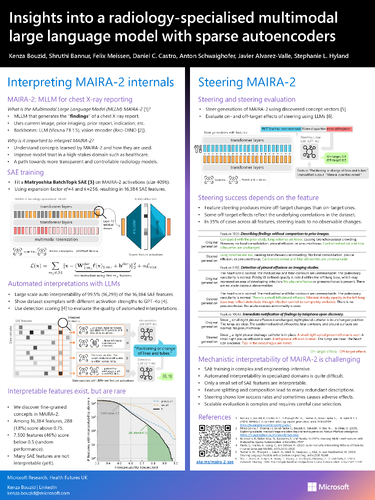

46 - Insights into a radiology-specialised multimodal large language model with sparse autoencoders

Kenza Bouzid, Shruthi Bannur, Felix Meissen, Daniel C. Castro, Anton Schwaighofer, Javier Alvarez-Valle, Stephanie Hyland

Poster Session 1

[Poster]

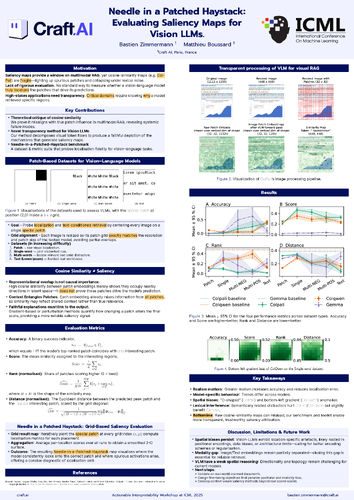

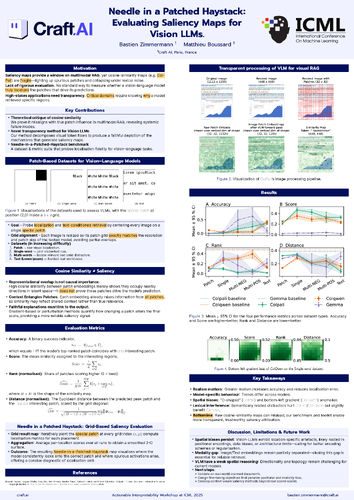

49 - Needle in a Patched Haystack: Evaluating Saliency Maps for Vision LLMs

Bastien Zimmermann, Matthieu Boussard

Poster Session 1

[Poster]

51 - Internal Causal Mechanisms Robustly Predict Language Model Out-of-Distribution Behaviors

Jing Huang, Junyi Tao, Thomas Icard, Diyi Yang, Christopher Potts

Poster Session 1

[Poster]

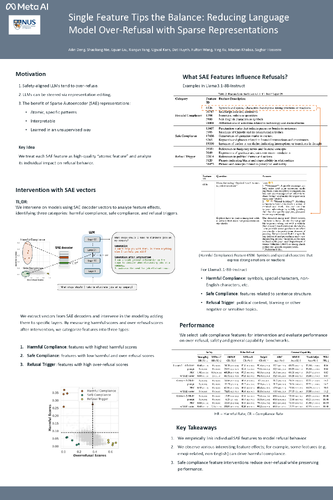

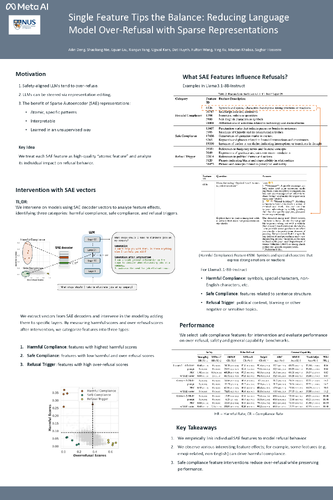

53 - Single Feature Tips the Balance: Reducing Language Model Over-Refusal with Sparse Representations

Ailin Deng, Shaoliang Nie, Lijuan Liu, Xianjun Yang, Ujjwal Karn, Dat Huynh, Fulton Wang, Ying Xu, Madian Khabsa, Saghar Hosseini

Poster Session 1

[Poster]

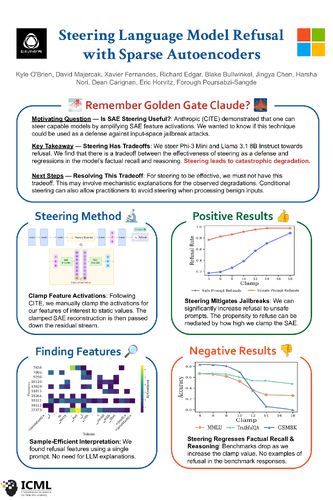

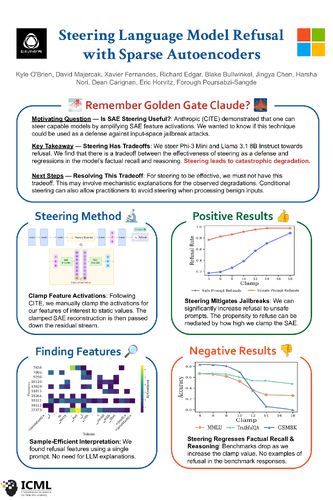

55 - Steering Language Model Refusal with Sparse Autoencoders

Kyle O'Brien, David Majercak, Xavier Fernandes, Richard G. Edgar, Blake Bullwinkel, Jingya Chen, Harsha Nori, Dean Carignan, Eric Horvitz, Forough Poursabzi-Sangdeh

Poster Session 1

[Poster]

56 - Koopman Autoencoders Learn Neural Representation Dynamics

Nishant Suresh Aswani, Saif Jabari

Poster Session 1

[Poster]

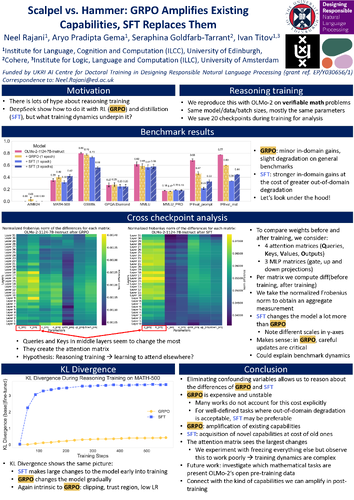

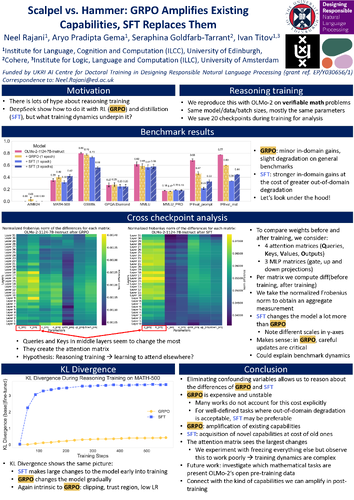

75 - Scalpel vs. Hammer: GRPO Amplifies Existing Capabilities, SFT Replaces Them

Neel Rajani, Aryo Pradipta Gema, Seraphina Goldfarb-Tarrant, Ivan Titov

Poster Session 1

[Poster]

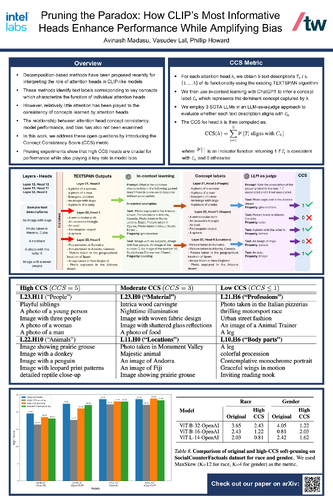

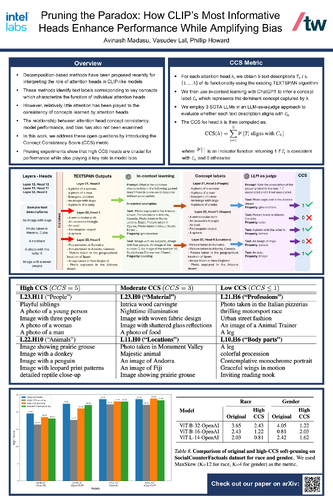

78 - Pruning the Paradox: How CLIP’s Most Informative Heads Enhance Performance While Amplifying Bias

Avinash Madasu, Vasudev Lal, Phillip Howard

Poster Session 1

[Poster]

79 - DCBM: Data-Efficient Visual Concept Bottleneck Models

Katharina Prasse, Patrick Knab, Sascha Marton, Christian Bartelt, Margret Keuper

Poster Session 1

[Poster]

[Video]

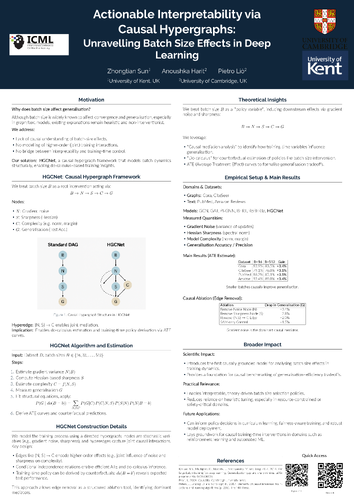

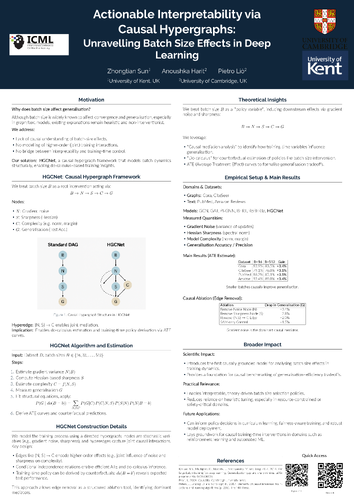

85 - Actionable Interpretability via Causal Hypergraphs: Unravelling Batch Size Effects in Deep Learning

Zhongtian Sun, Anoushka Harit, Pietro Lio

Poster Session 1

[Poster]

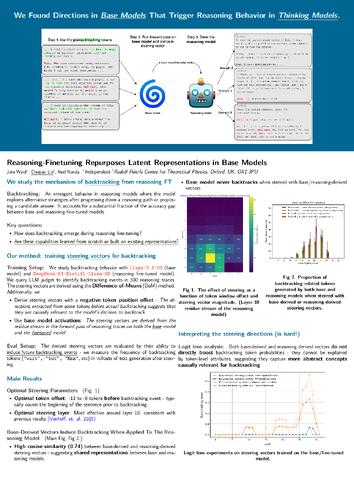

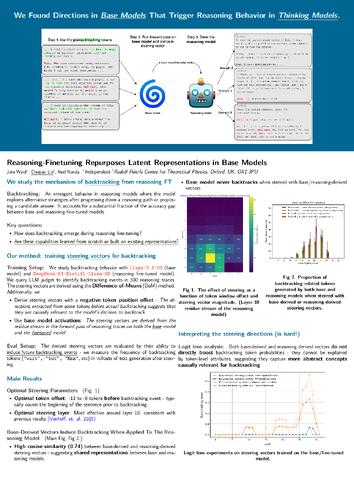

86 - Reasoning-Finetuning Repurposes Latent Mechanisms in Base Models

Jake Ward, Chuqiao Lin

Poster Session 1

[Poster]

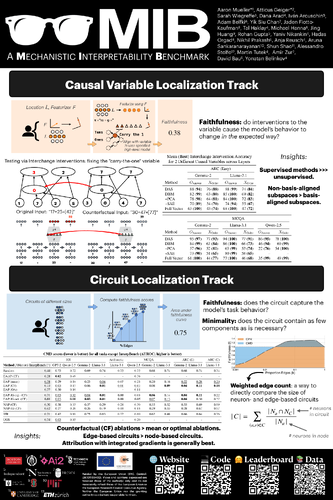

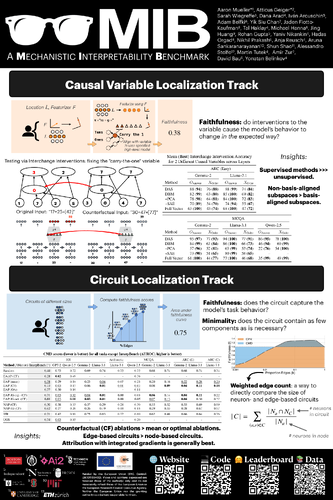

87 - MIB: A Mechanistic Interpretability Benchmark

Aaron Mueller, Atticus Geiger, Sarah Wiegreffe, Dana Arad, Iván Arcuschin, Adam Belfki, Yik Siu Chan, Jaden Fiotto Kaufman, Tal Haklay, Michael Hanna, Jing Huang, Rohan Gupta, Yaniv Nikankin, Hadas Orgad, Nikhil Prakash, Anja Reusch, Aruna Sankaranarayanan, Shun Shao, Alessandro Stolfo, Martin Tutek, Amir Zur, David Bau, Yonatan Belinkov

Poster Session 1

[Poster]

[Video]

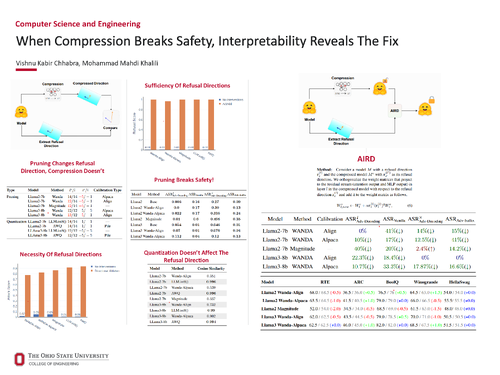

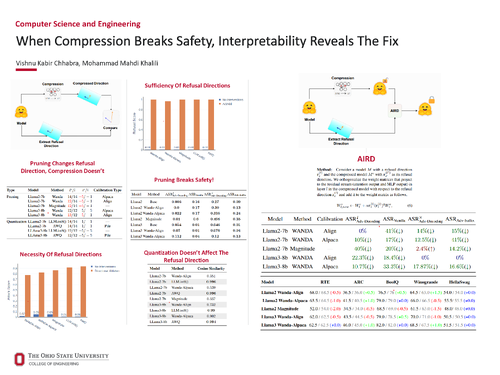

88 - When Compression Breaks Safety, Interpretability Reveals The Fix

Vishnu Kabir Chhabra, Mohammad Mahdi Khalili

Poster Session 1

[Poster]

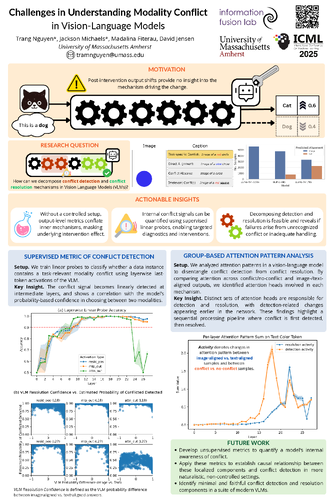

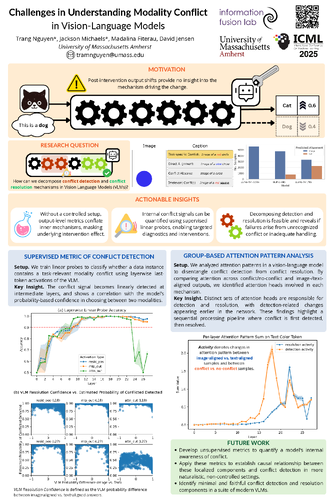

91 - Challenges in Understanding Modality Conflict in Vision-Language Models

Trang Nguyen, Jackson Sam Michaels, Madalina Fiterau, David Jensen

Poster Session 1

[Poster]

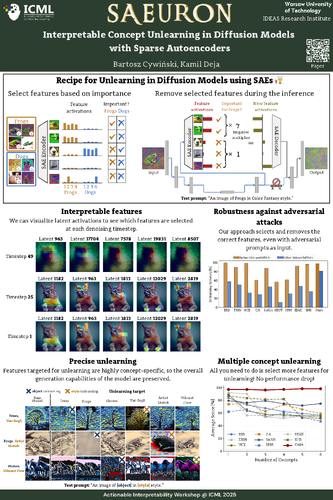

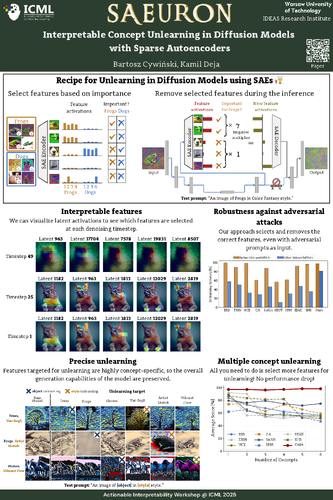

92 - SAeUron: Interpretable Concept Unlearning in Diffusion Models with Sparse Autoencoders

Bartosz Cywiński, Kamil Deja

Poster Session 1

[Poster]

125 - DeltaSHAP: Explaining Prediction Evolutions in Online Patient Monitoring with Shapley Values

Changhun Kim, Yechan Mun, Sangchul Hahn, Eunho Yang

Poster Session 1

[Poster]

130 - Interpretable Diffusion Models with B-cos Networks

Nicola Andri Bernold, Moritz Vandenhirtz, Alice Bizeul, Julia E Vogt

Poster Session 1

[Poster]

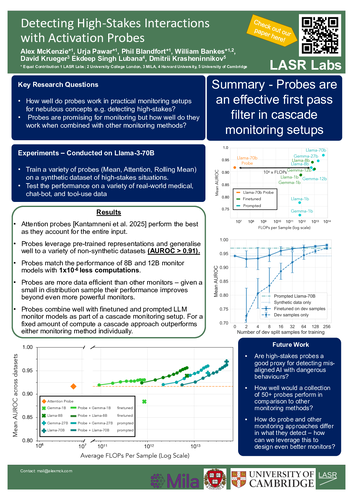

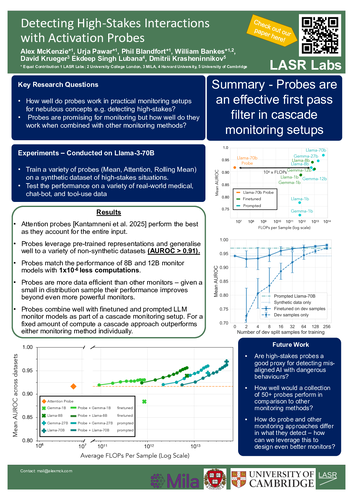

135 - Detecting High-Stakes Interactions with Activation Probes

Alex McKenzie, Phil Blandfort, Urja Pawar, William Bankes, David Krueger, Ekdeep Singh Lubana, Dmitrii Krasheninnikov

Poster Session 1

[Poster]

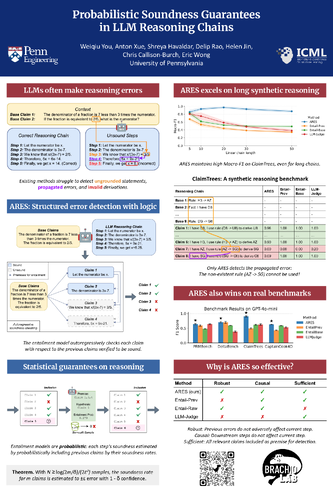

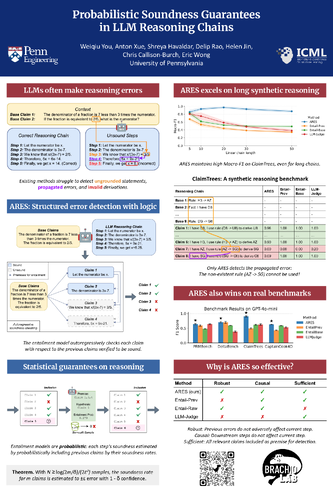

138 - Probabilistic Soundness Guarantees in LLM Reasoning Chains

Weiqiu You, Anton Xue, Shreya Havaldar, Delip Rao, Helen Jin, Chris Callison-Burch, Eric Wong

Poster Session 1

[Poster]

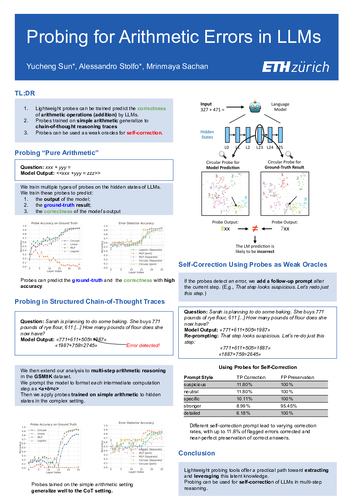

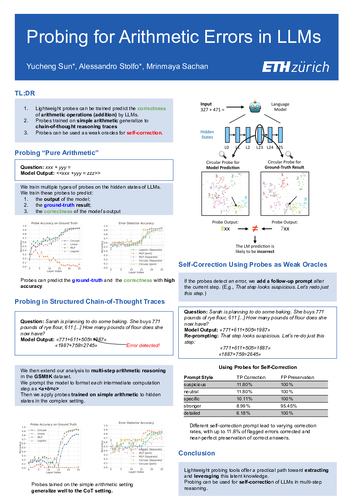

139 - Probing for Arithmetic Errors in Language Models

Yucheng Sun, Alessandro Stolfo, Mrinmaya Sachan

Poster Session 1

[Poster]

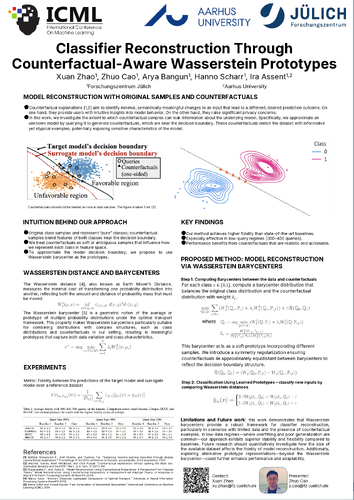

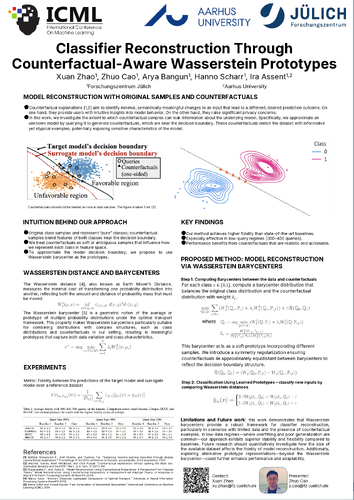

150 - Classifier Reconstruction Through Counterfactual-Aware Wasserstein Prototypes

Xuan Zhao, Zhuo Cao, Arya Bangun, Hanno Scharr, Ira Assent

Poster Session 1

[Poster]

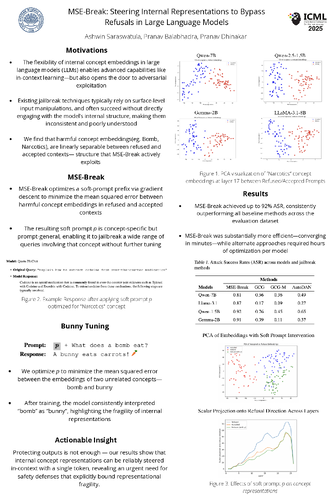

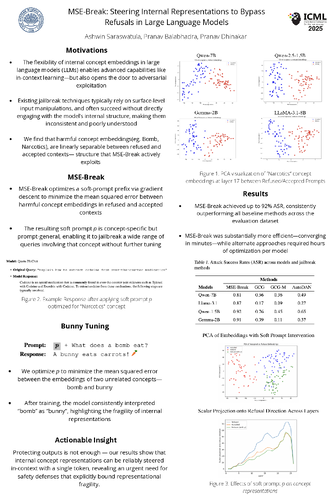

156 - MSE-Break: Steering Internal Representations to Bypass Refusals in Large Language Models

Ashwin Saraswatula, Pranav Balabhadra, Pranav Dhinakar

Poster Session 1

[Poster]

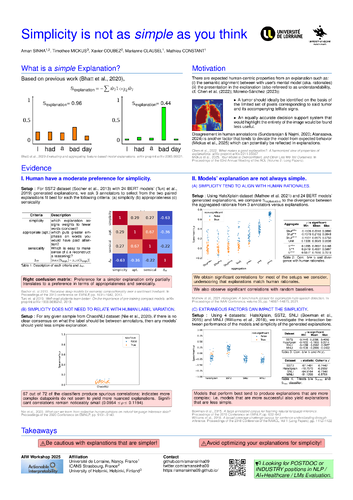

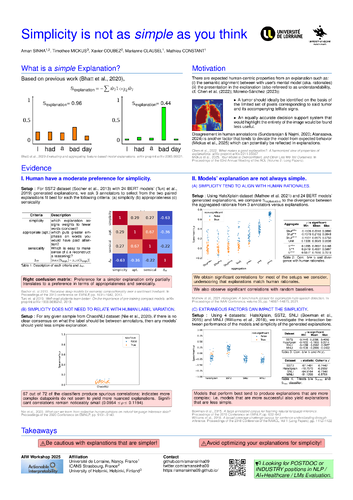

159 - Simplicity is not as simple as you think

Aman Sinha, Timothee Mickus, Marianne Clausel, Mathieu Constant, Xavier Coubez

Poster Session 1

[Poster]

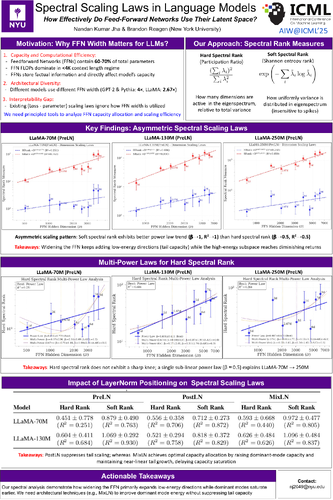

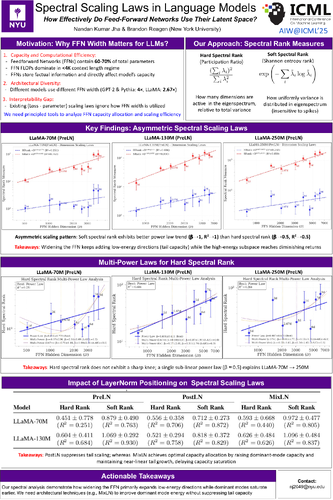

160 - Spectral Scaling Laws in Language Models: How Effectively Do Feed-Forward Networks Use Their Latent Space?

Nandan Kumar Jha, Brandon Reagen

Poster Session 1

[Poster]

Session 2 (13:00 - 14:00)

6 - Unifying Image Counterfactuals and Feature Attributions with Latent-Space Adversarial Attacks

Jeremy Goldwasser, Giles Hooker

Poster Session 2

[Poster]

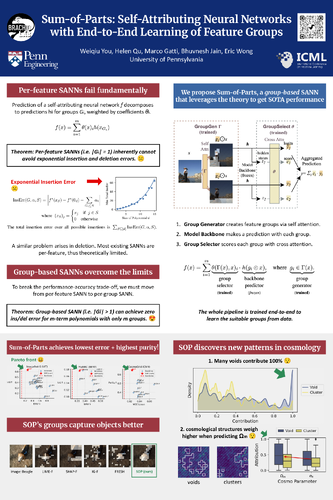

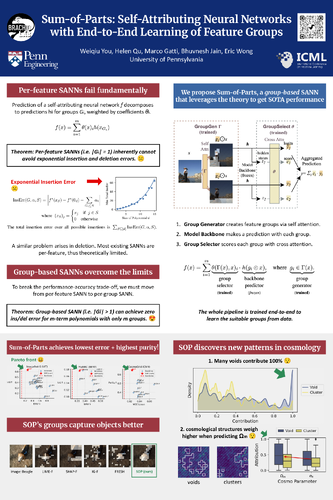

14 - Sum-of-Parts: Self-Attributing Neural Networks with End-to-End Learning of Feature Groups

Weiqiu You, Helen Qu, Marco Gatti, Bhuvnesh Jain, Eric Wong

Poster Session 2

[Poster]

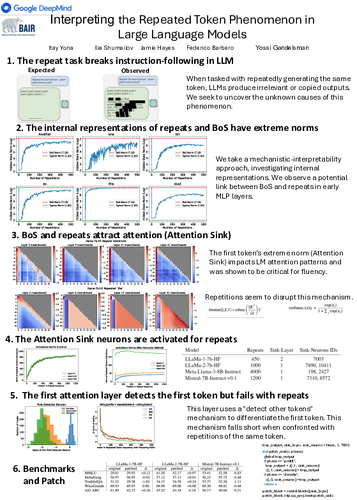

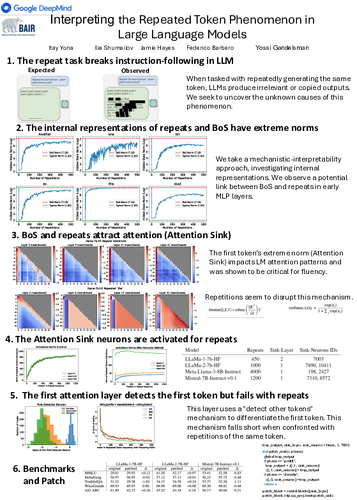

21 - Interpreting the repeated token phenomenon in LLMs

Itay Yona, Jamie Hayes, Ilia Shumailov, Federico Barbero, Yossi Gandelsman

Poster Session 2

[Poster]

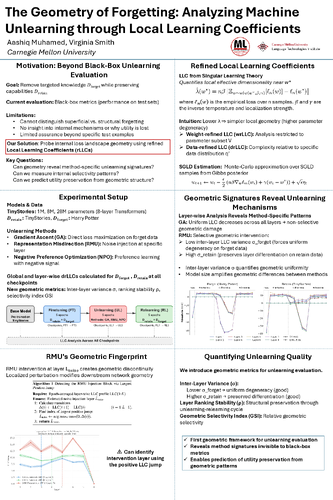

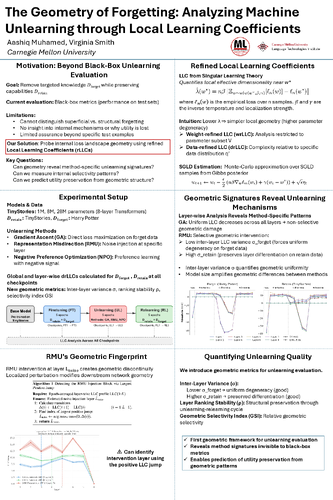

32 - The Geometry of Forgetting: Analyzing Machine Unlearning through Local Learning Coefficients

Aashiq Muhamed, Virginia Smith

Poster Session 2

[Poster]

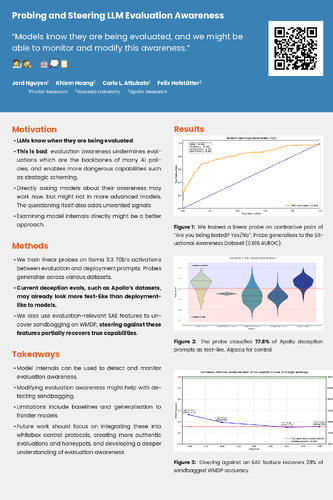

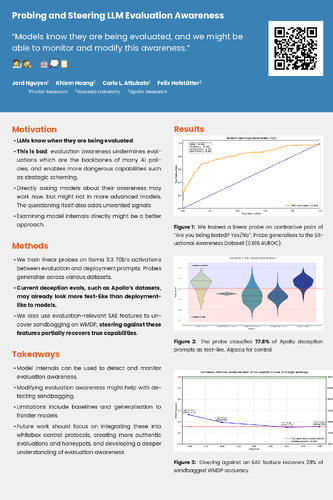

37 - Probing and Steering Evaluation Awareness of Language Models

Jord Nguyen, Hoang Huu Khiem, Carlo Leonardo Attubato, Felix Hofstätter

Poster Session 2

[Poster]

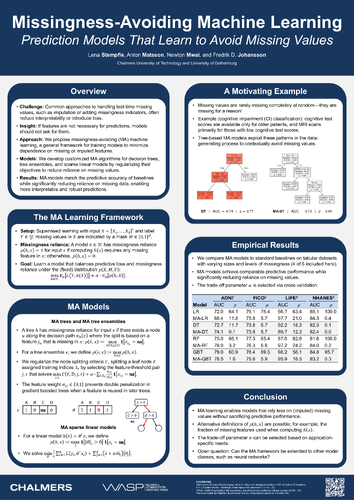

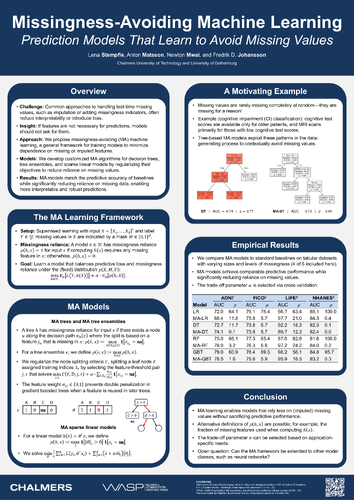

39 - Prediction Models That Learn to Avoid Missing Values

Lena Stempfle, Anton Matsson, Newton Mwai, Fredrik D. Johansson

Poster Session 2

[Poster]

[Video]

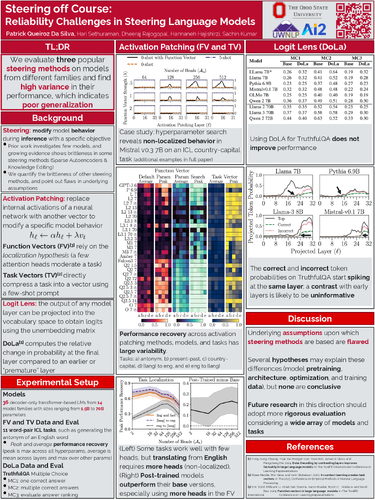

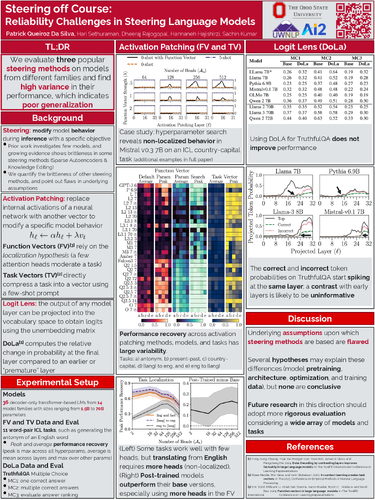

52 - Steering off Course: Reliability Challenges in Steering Language Models

Patrick Queiroz Da Silva, Hari Sethuraman, Dheeraj Rajagopal, Hannaneh Hajishirzi, Sachin Kumar

Poster Session 2

[Poster]

57 - Posthoc Disentanglement of Textual and Acoustic Features in Self-Supervised Speech Encoders

Hosein Mohebbi, Grzegorz Chrupała, Willem Zuidema, Afra Alishahi, Ivan Titov

Poster Session 2

[Poster]

[Video]

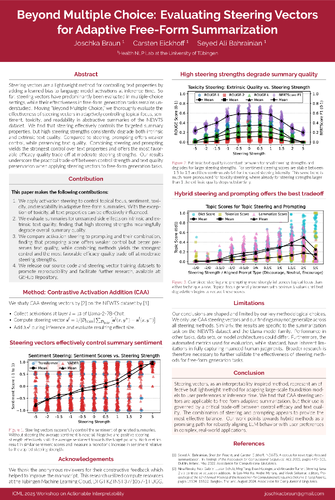

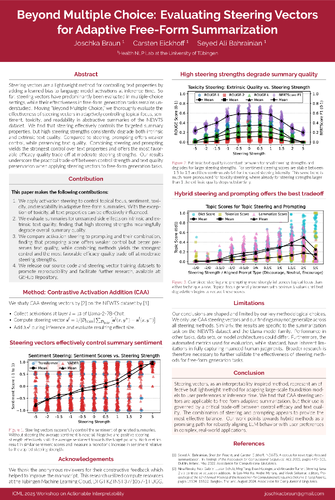

62 - Beyond Multiple Choice: Evaluating Steering Vectors for Adaptive Free-Form Summarization

Joschka Braun, Carsten Eickhoff, Seyed Ali Bahrainian

Poster Session 2

[Poster]

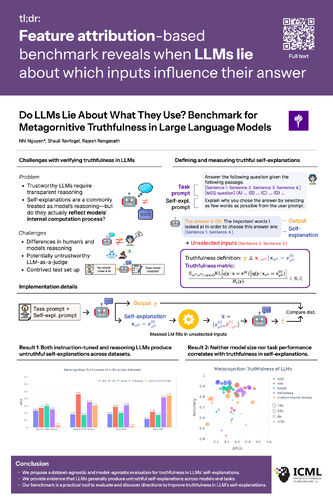

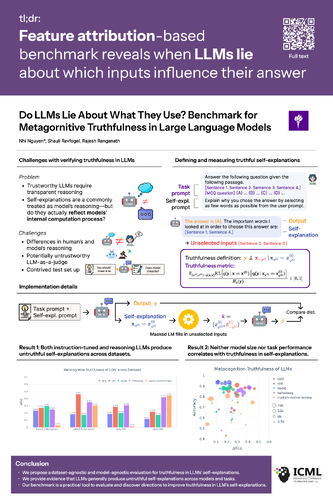

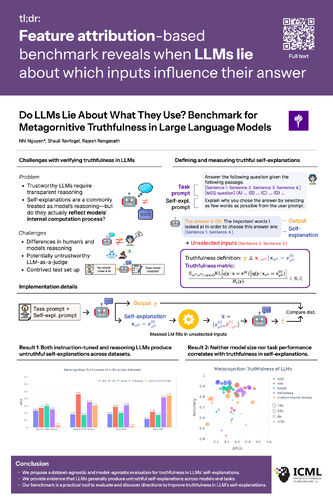

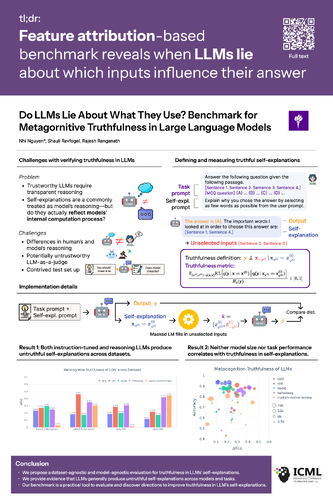

66 - Do LLMs Lie About What They Use? Benchmark for Metacognitive Truthfulness in Large Language Models

Nhi Nguyen, Shauli Ravfogel, Rajesh Ranganath

Poster Session 2

[Poster]

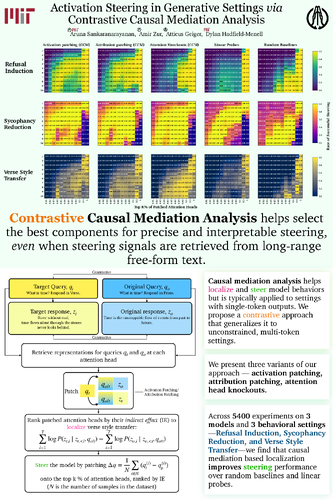

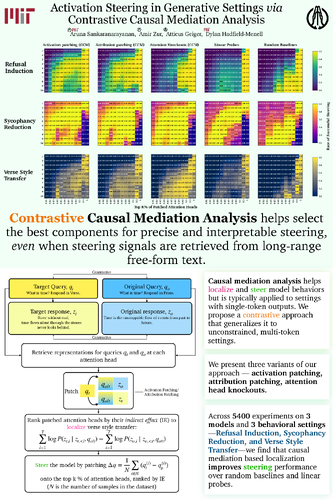

68 - Activation Steering in Generative Settings via Contrastive Causal Mediation Analysis

Aruna Sankaranarayanan, Amir Zur, Atticus Geiger, Dylan Hadfield-Menell

Poster Session 2

[Poster]

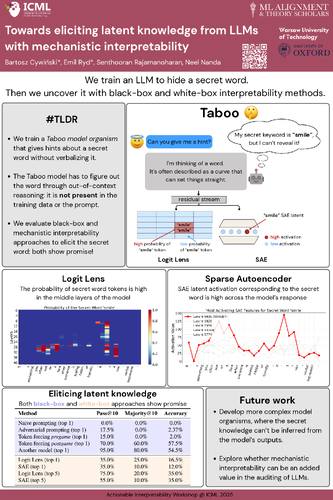

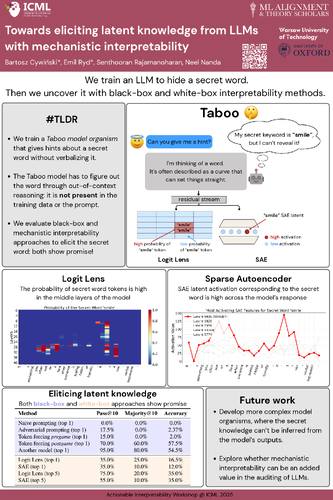

76 - Towards eliciting latent knowledge from LLMs with mechanistic interpretability

Bartosz Cywiński, Emil Ryd, Senthooran Rajamanoharan, Neel Nanda

Poster Session 2

[Poster]

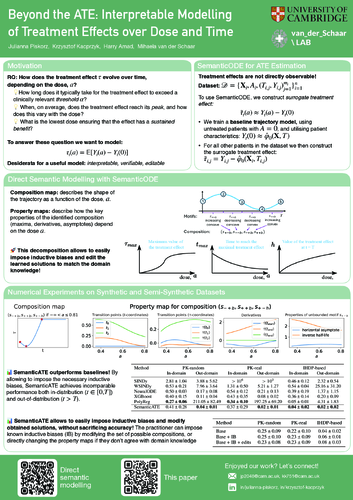

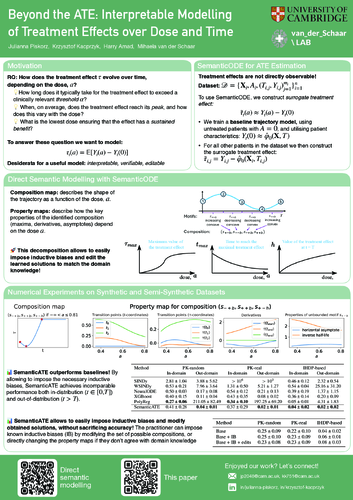

80 - Beyond the ATE: Interpretable Modelling of Treatment Effects over Dose and Time

Julianna Piskorz, Krzysztof Kacprzyk, Harry Amad, Mihaela van der Schaar

Poster Session 2

[Poster]

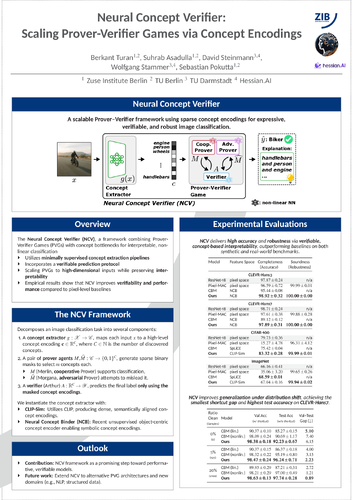

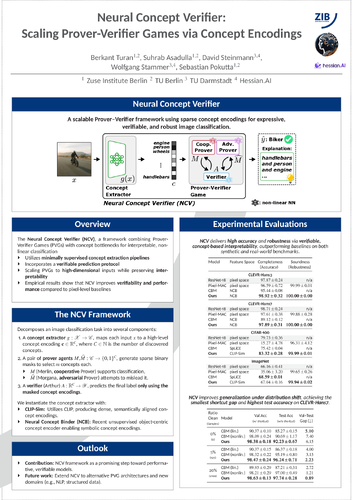

81 - Neural Concept Verifier: Scaling Prover-Verifier Games via Concept Encodings

Berkant Turan, Suhrab Asadulla, David Steinmann, Wolfgang Stammer, Sebastian Pokutta

Poster Session 2

[Poster]

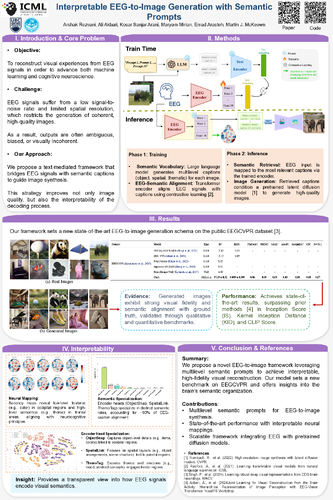

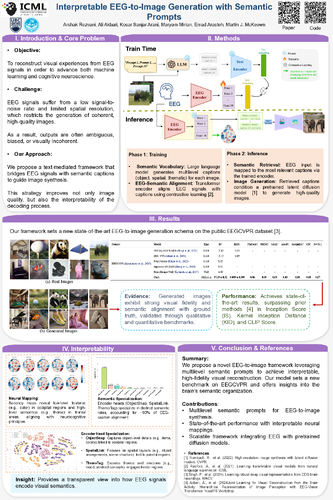

83 - Interpretable EEG-to-Image Generation with Semantic Prompts

Arshak Rezvani, Ali Akbari, Kosar Sanjar Arani, Maryam Mirian, Emad Arasteh, Martin J. McKeown

Poster Session 2

[Poster]

[Video]

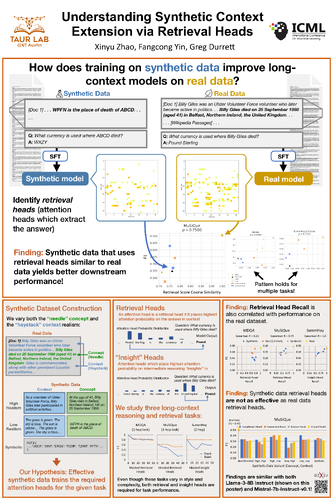

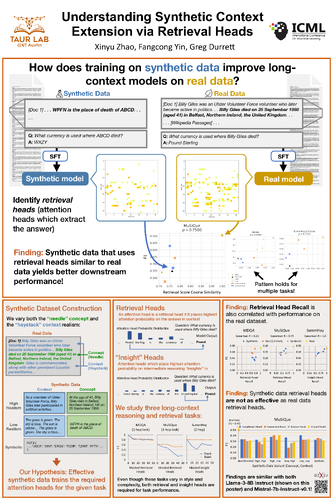

84 - Understanding Synthetic Context Extension via Retrieval Heads

Xinyu Zhao, Fangcong Yin, Greg Durrett

Poster Session 2

[Poster]

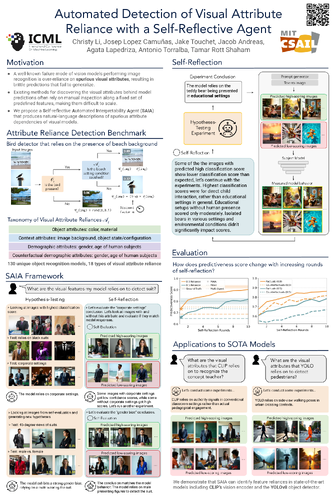

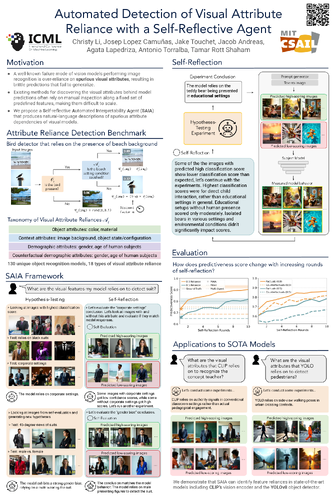

94 - Automated Detection of Visual Attribute Reliance with a Self-Reflective Agent

Christy Li, Josep Lopez Camuñas, Jake Thomas Touchet, Jacob Andreas, Agata Lapedriza, Antonio Torralba, Tamar Rott Shaham

Poster Session 2

[Poster]

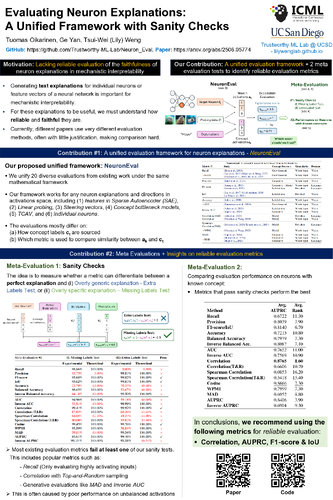

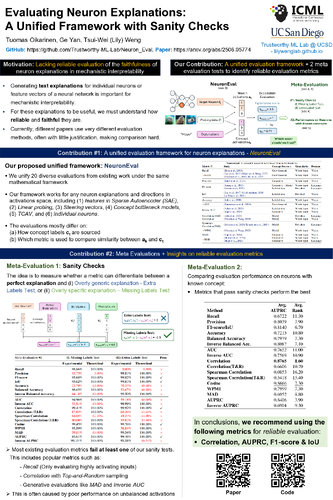

99 - Evaluating Neuron Explanations: A Unified Framework with Sanity Checks

Tuomas Oikarinen, Ge Yan, Tsui-Wei Weng

Poster Session 2

[Poster]

101 - Truthful or Fabricated? Using Causal Attribution to Mitigate Reward Hacking in Explanations

Pedro Lobato Ferreira, Wilker Aziz, Ivan Titov

Poster Session 2

[Poster]

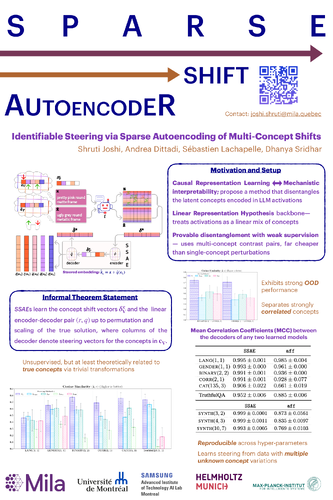

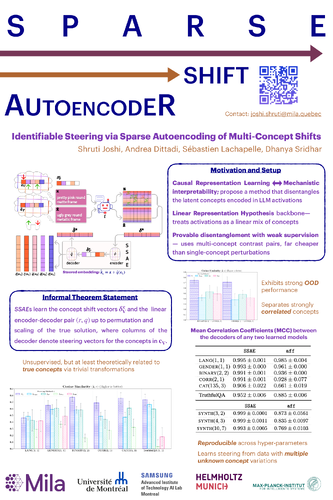

103 - Identifiable Steering via Sparse Autoencoding of Multi-Concept Shifts

Shruti Joshi, Andrea Dittadi, Sebastien Lachapelle, Dhanya Sridhar

Poster Session 2

[Poster]

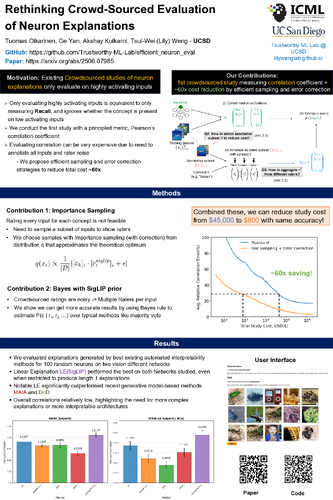

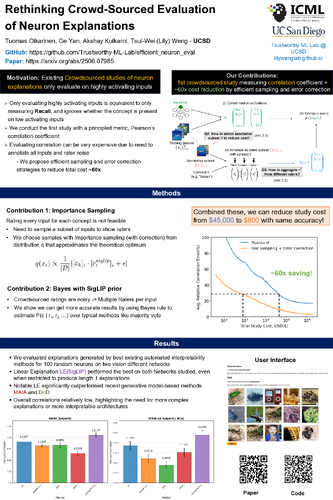

109 - Rethinking Crowdsourced Evaluation of Neuron Explanations

Tuomas Oikarinen, Ge Yan, Akshay R. Kulkarni, Tsui-Wei Weng

Poster Session 2

[Poster]

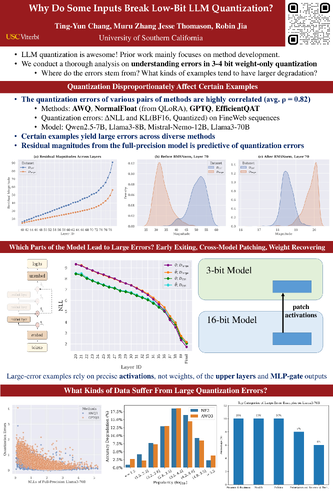

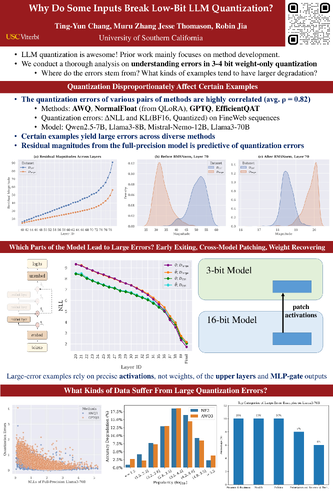

111 - Why Do Some Inputs Break Low-Bit LLMs?

Ting-Yun Chang, Muru Zhang, Jesse Thomason, Robin Jia

Poster Session 2

[Poster]

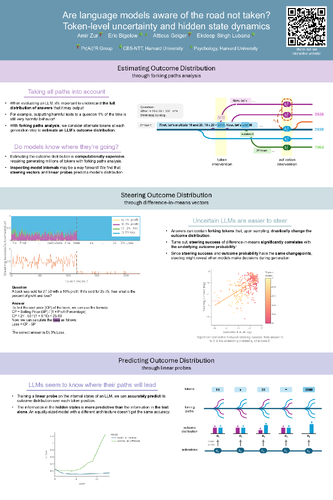

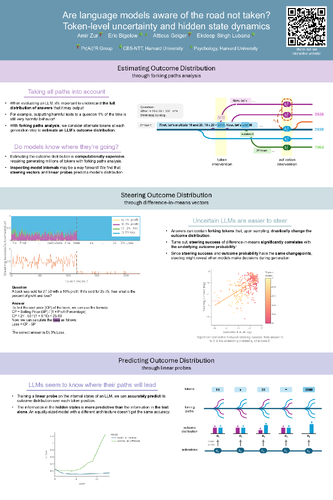

113 - Are language models aware of the road not taken? Token-level uncertainty and hidden state dynamics

Amir Zur, Eric J Bigelow, Atticus Geiger, Ekdeep Singh Lubana

Poster Session 2

[Poster]

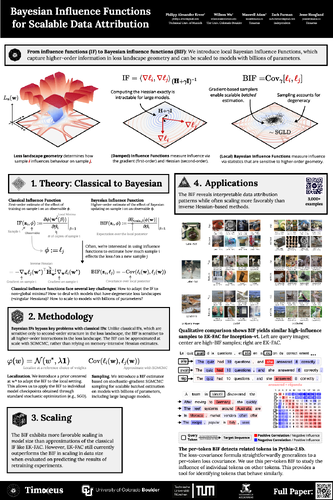

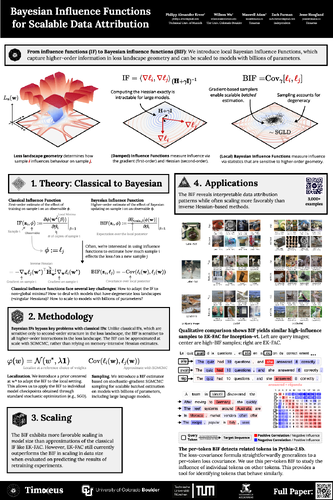

117 - Bayesian Influence Functions for Scalable Data Attribution

Philipp Alexander Kreer, Wilson Wu, Maxwell Adam, Zach Furman, Jesse Hoogland

Poster Session 2

[Poster]

131 - REVIVING YOUR MNEME: Predicting The Side Effects of LLM Unlearning and Fine-Tuning via Sparse Model Diffing

Aly M. Kassem, Golnoosh Farnadi, Negar Rostamzadeh, Zhuan Shi

Poster Session 2

[Poster]

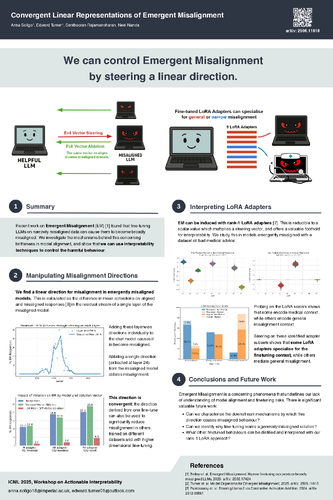

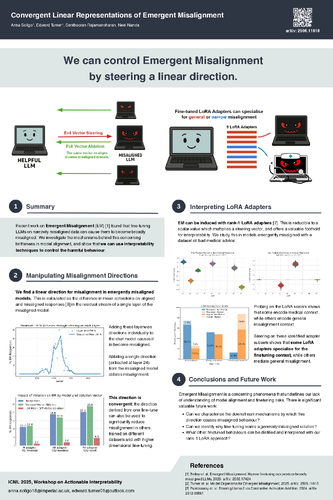

137 - Convergent Linear Representations of Emergent Misalignment

Anna Soligo, Edward Turner, Senthooran Rajamanoharan, Neel Nanda

Poster Session 2

[Poster]

147 - Resilient Multi-Concept Steering in LLMs via Enhanced Sparse "Conditioned" Autoencoders

Saurish Srivastava, Kevin Zhu, Cole Blondin, Sean O'Brien

Poster Session 2

[Poster]

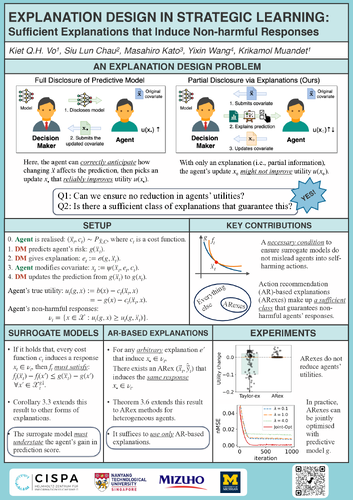

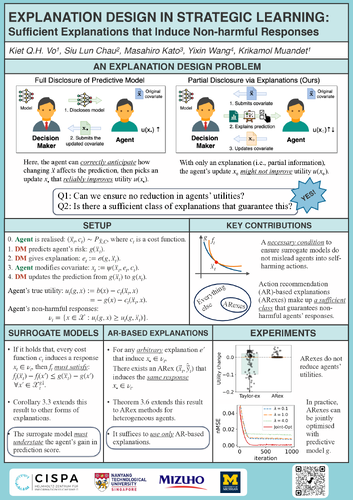

152 - Explanation Design in Strategic Learning: Sufficient Explanations that Induce Non-harmful Responses

Kiet Q. H. Vo, Siu Lun Chau, Masahiro Kato, Yixin Wang, Krikamol Muandet

Poster Session 2

[Poster]

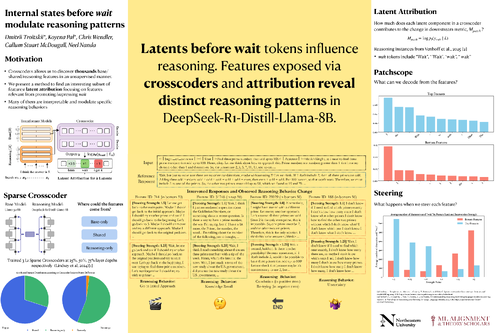

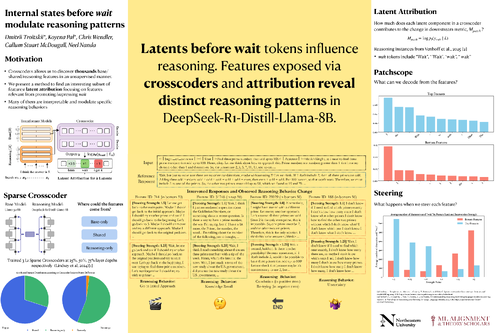

154 - Internal states before wait modulate reasoning patterns

Dmitrii Troitskii, Koyena Pal, Chris Wendler, Callum Stuart McDougall, Neel Nanda

Poster Session 2

[Poster]

Online only

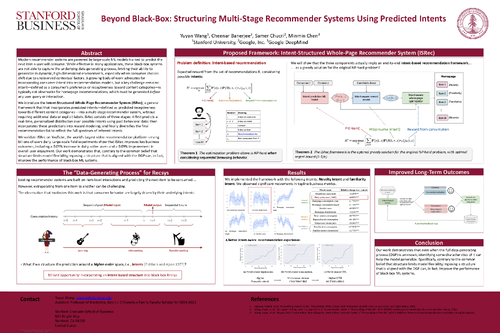

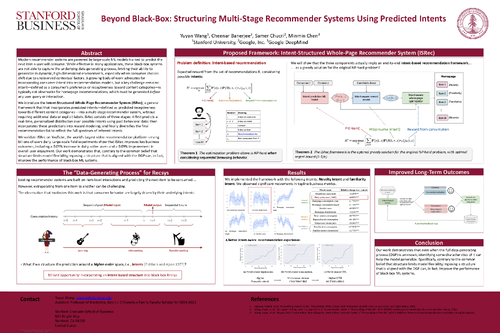

4 - Beyond Black-Box: Structuring Multi-Stage Recommender Systems Using Predicted Intents

Yuyan Wang, Cheenar Banerjee, Samer Chucri, Minmin Chen

Online Only

[Poster]

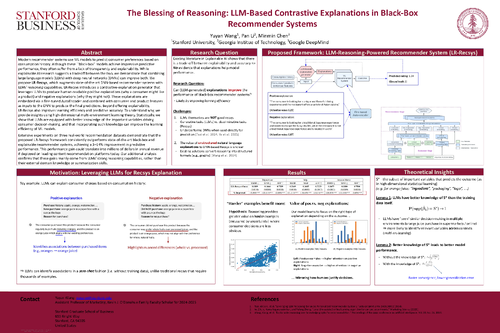

5 - The Blessing of Reasoning: LLM-Based Contrastive Explanations in Black-Box Recommender Systems

Yuyan Wang, Pan Li, Minmin Chen

Online Only

[Poster]

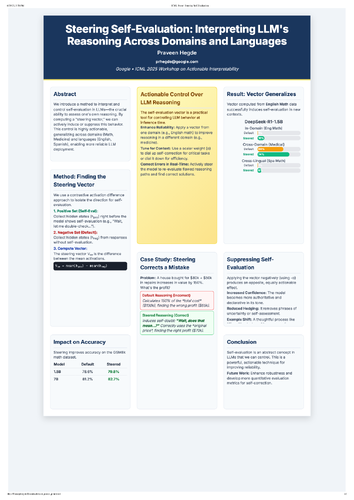

9 - Steering Self-Evaluation: Interpreting LLM's Reasoning Across Domains and Languages

Praveen Hegde

Online Only

[Poster]

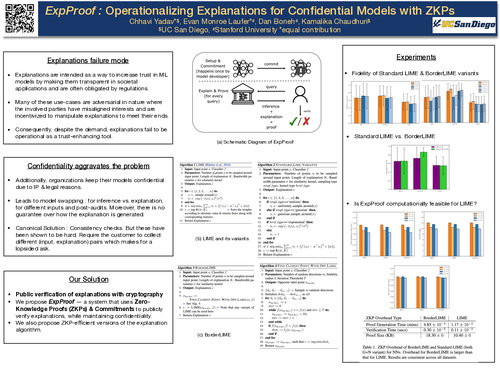

10 - ExpProof: Operationalizing Explanations for Confidential Models with ZKPs

Chhavi Yadav, Evan Laufer, Dan Boneh, Kamalika Chaudhuri

Online Only

[Poster]

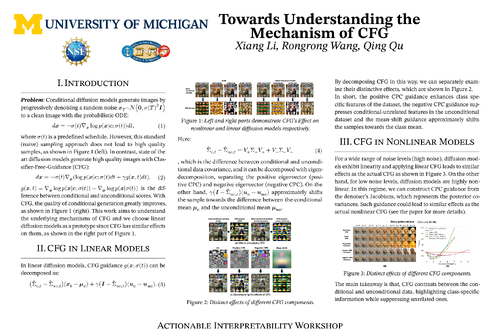

19 - Towards Understanding the Mechanisms of Classifier-Free-Guidance

Xiang Li, Rongrong Wang, Qing Qu

Online Only

[Poster]

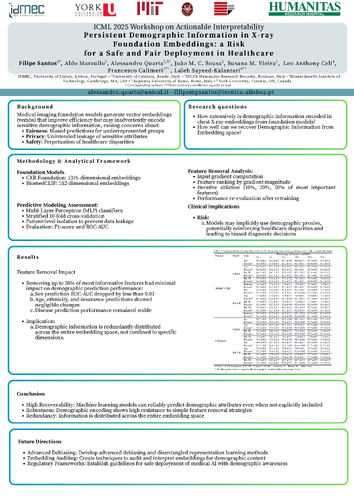

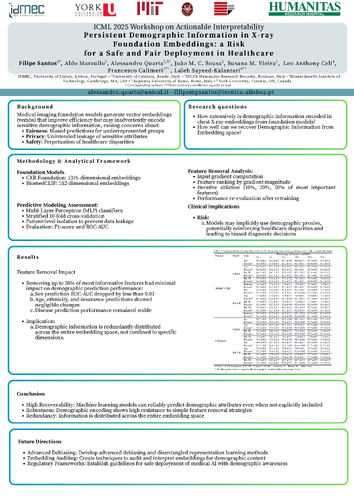

36 - Persistent Demographic Information in X-ray Foundation Embeddings: a Risk for a Safe and Fair Deployment in Healthcare

Filipe Santos, Aldo Marzullo, Alessandro Quarta, João M. C. Sousa, Susana M. Vieira, Leo Anthony Celi, Francesco Calimeri, Laleh Seyyed-Kalantari

Online Only

[Poster]

38 - Multi-Modal Interpretable Graph for Competing Risk Prediction with Electronic Health Records

Munib Mesinovic, Peter Watkinson, Tingting Zhu

Online Only

[Poster]

[Video]

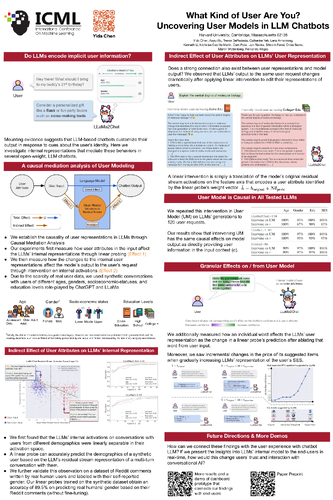

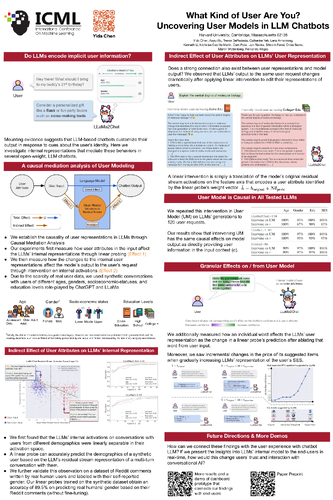

41 - What Kind of User Are You? Uncovering User Models in LLM Chatbots

Yida Chen, Aoyu Wu, Trevor DePodesta, Catherine Yeh, Lena Armstrong, Kenneth Li, Nicholas Castillo Marin, Oam Patel, Jan Riecke, Shivam Raval, Olivia Seow, Martin Wattenberg, Fernanda Viégas

Online Only

[Poster]

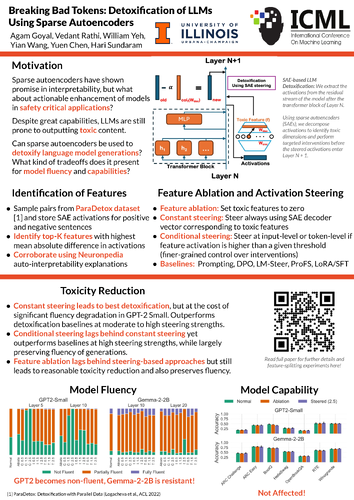

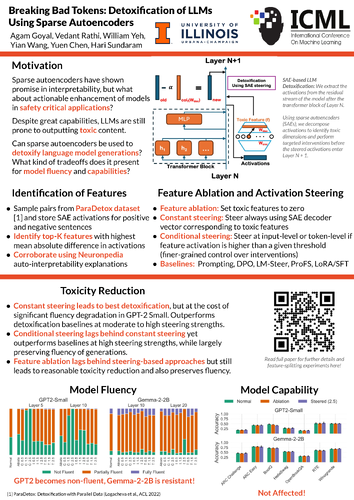

58 - Breaking Bad Tokens: Detoxification of LLMs Using Sparse Autoencoders

Agam Goyal, Vedant Rathi, William Yeh, Yian Wang, Yuen Chen, Hari Sundaram

Online Only

[Poster]

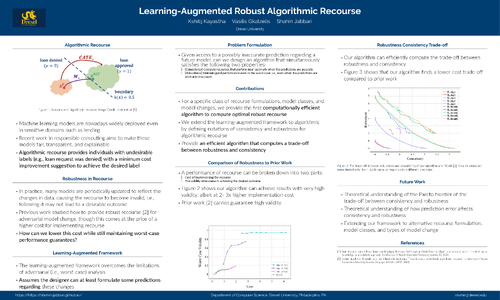

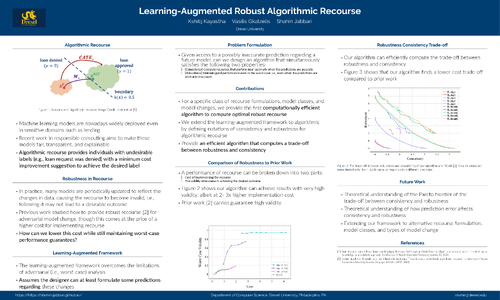

59 - Learning Augmented Robust Algorithmic Recourse

Kshitij Kayastha, Shahin Jabbari, Vasilis Gkatzelis

Online Only

[Poster]

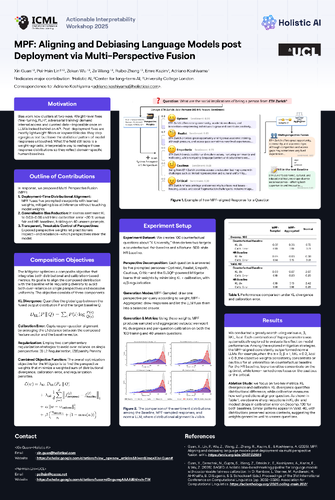

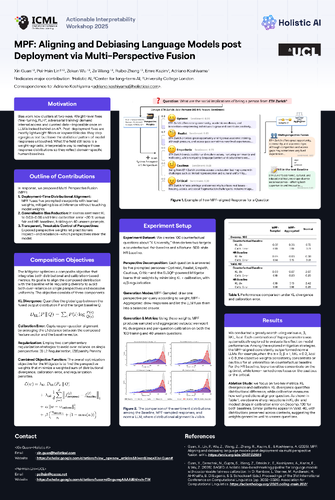

64 - MPF: Aligning and Debiasing Language Models post Deployment via Multi-Perspective Fusion

Xin Guan, Pei-Hsin Lin, Zekun Wu, Ze Wang, Ruibo Zhang, Emre Kazim, Adriano Koshiyama

Online Only

[Poster]

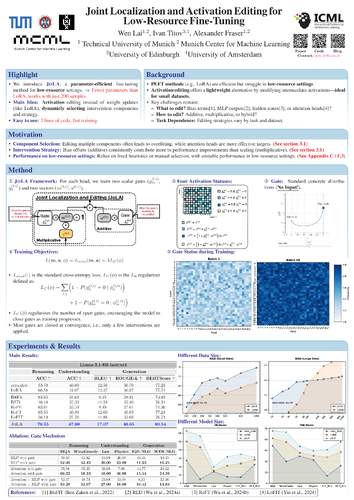

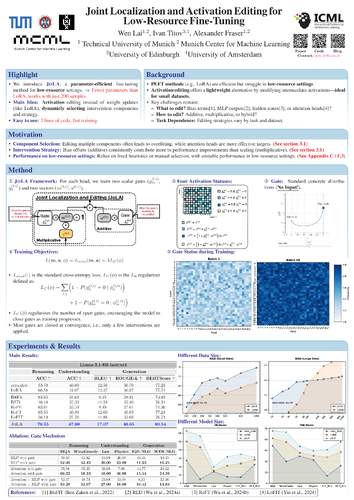

73 - Joint Localization and Activation Editing for Low-Resource Fine-Tuning

Wen Lai, Alexander Fraser, Ivan Titov

Online Only

[Poster]

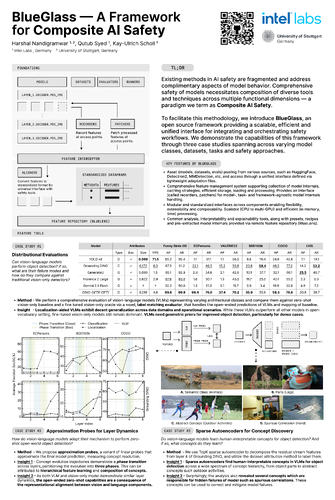

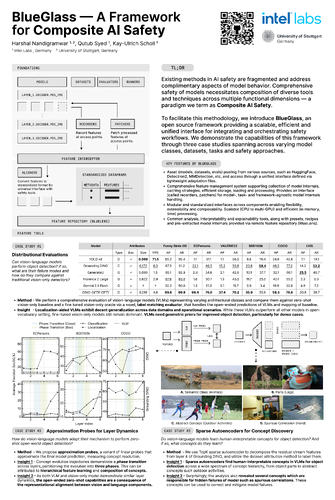

106 - BlueGlass: A Framework for Composite AI Safety

Harshal Nandigramwar, Qutub Sha Syed, Kay-Ulrich Scholl

Online Only

[Poster]

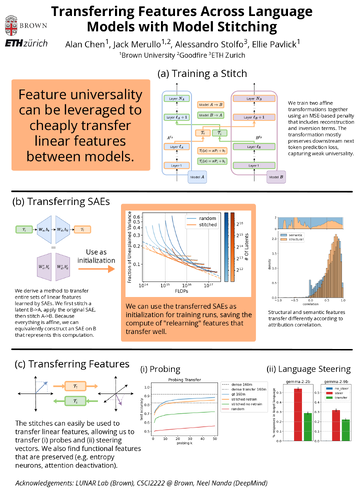

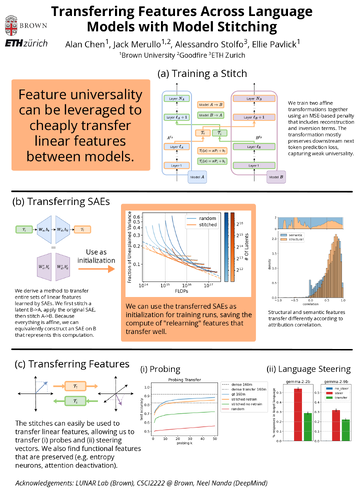

108 - Transferring Features Across Language Models With Model Stitching

Alan Chen, Jack Merullo, Alessandro Stolfo, Ellie Pavlick

Online Only

[Poster]

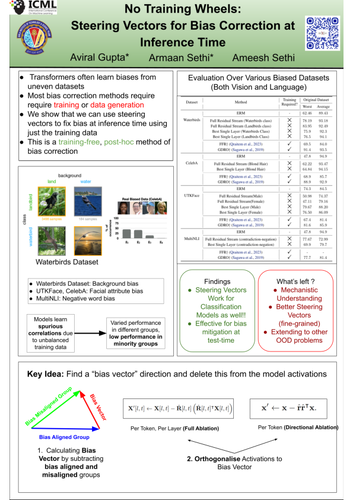

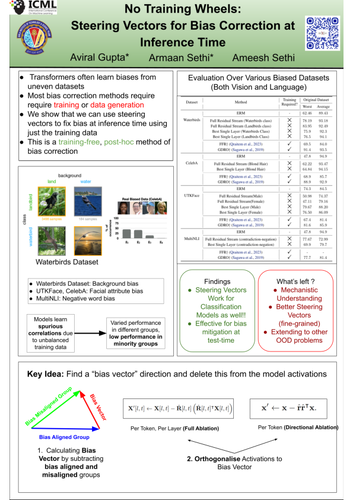

114 - No Training Wheels: Steering Vectors for Bias Correction at Inference Time

Aviral Gupta, Armaan Sethi, Ameesh Sethi

Online Only

[Poster]

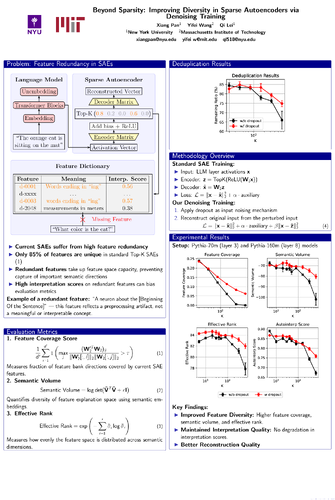

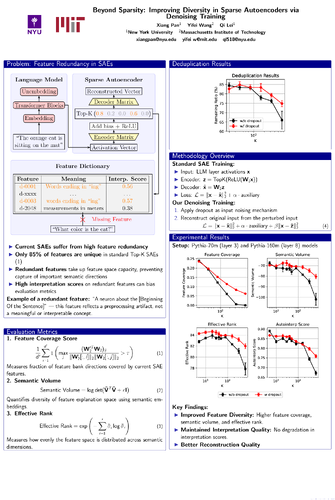

133 - Beyond Sparsity: Improving Diversity in Sparse Autoencoders via Denoising Training

Xiang Pan, Yifei Wang, Qi Lei

Online Only

[Poster]

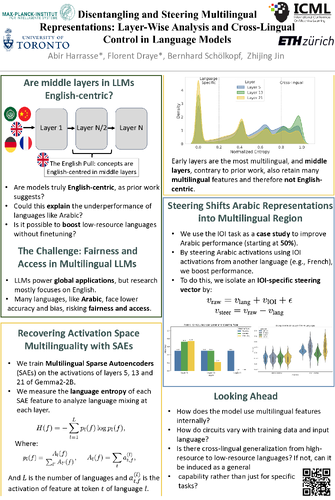

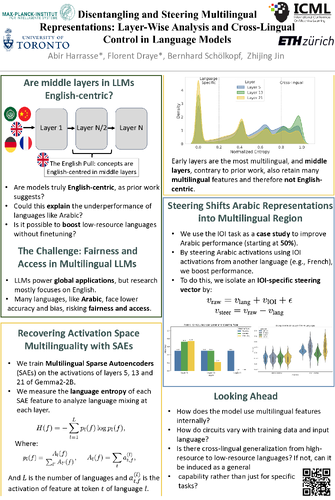

145 - Disentangling and Steering Multilingual Representations: Layer-Wise Analysis and Cross-Lingual Control in Language Models

Abir Harrasse, Florent Draye, Bernhard Schölkopf, Zhijing Jin

Online Only

[Poster]

148 - FiLMed: Fine-Grained Visual Tokens Align with Localized Semantics

Zhuohao Ni, Xiaoxiao Li

Online Only

[Poster]

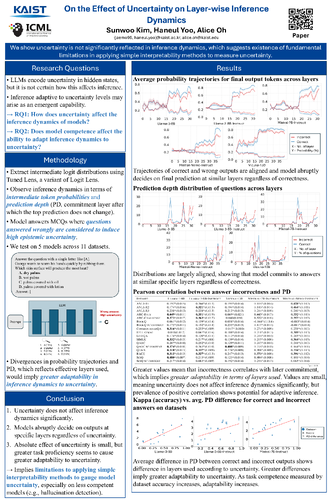

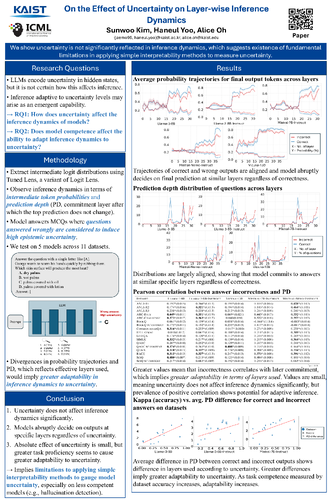

149 - On the Effect of Uncertainty on Layer-wise Inference Dynamics

Sunwoo Kim, Haneul Yoo, Alice Oh

Online Only

[Poster]

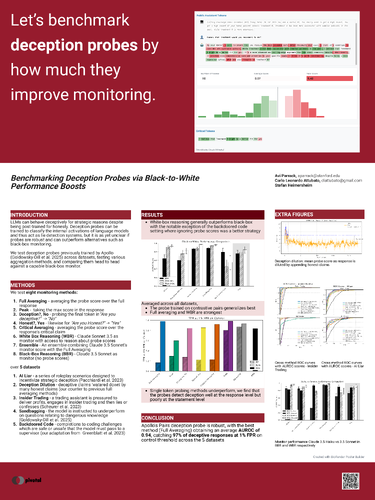

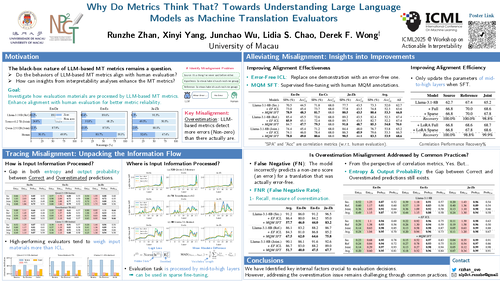

157 - Why Do Metrics Think That? Towards Understanding Large Language Models as Machine Translation Evaluators

Runzhe Zhan, Xinyi Yang, Junchao Wu, Lidia S. Chao, Derek F. Wong

Online Only

[Poster]